Microsoft has unveiled a new vision for edge computing networks

By Max Burkhalter, May 8, 2018

In traditional network setups, the vast majority of data processing was done at a central location. Sensors would collect the data, which would then flow through some kind of connection (wired or wireless) until it reached equipment with actual computing power. While this method still works, it has potential drawbacks.

For instance, a downed connection can spell disaster because it interrupts the data flow. If an organization is waiting on data from its remote branches to compile an annual review, that information could be delayed or, worse, corrupted or fragmented by unreliable network connections.

Edge computing aims to improve this system. By moving more data processing to the source, or edge, of the network, it allows cloud platforms to be used more efficiently. This greatly reduces the bandwidth needed to funnel information from the sensor to the central processor: analytics are performed on-site, and only the conclusions are relayed.
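To make the bandwidth argument concrete, here is a minimal sketch, assuming a device that samples a sensor many times per minute and only needs to report a summary upstream. It does not use any Azure IoT Edge API; `summarize_readings` and `payload_size` are hypothetical helpers that simply compare the size of a raw JSON upload with the size of an on-device summary.

```python
import json
import statistics


def summarize_readings(readings: list[float]) -> dict:
    """Reduce a window of raw sensor readings to a small summary on the edge device."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": statistics.fmean(readings),
    }


def payload_size(obj) -> int:
    """Size in bytes of the JSON message that would be sent upstream."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    # Hypothetical one-minute window of temperature samples taken at 10 Hz.
    raw = [20.0 + (i % 50) * 0.01 for i in range(600)]
    summary = summarize_readings(raw)
    print("raw bytes:", payload_size(raw))
    print("summary bytes:", payload_size(summary))
```

The raw list grows with every sample, while the summary stays a handful of fields regardless of how many readings went into it; that fixed-size result is what an edge device would relay instead of the full data stream.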

With edge computing, data can survive a network failure. If the connection is temporarily lost, the sensor device simply stores its calculations until connectivity is reestablished. Edge computing is currently at the forefront of technological infrastructure, and Microsoft has recently announced plans to push the boundaries of this approach further.
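The buffering behavior described above is commonly called store-and-forward. The sketch below is a generic illustration of that pattern, not how Azure IoT Edge implements it; the `StoreAndForwardBuffer` class, its `send` callback (assumed to return `True` on successful delivery) and the `max_items` limit are all hypothetical.

```python
from collections import deque
from typing import Callable


class StoreAndForwardBuffer:
    """Queue results locally while the uplink is down; flush them in order once it returns."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 10_000):
        # send() is a hypothetical uplink call that returns True when delivery succeeds.
        self._send = send
        # Bounded so a long outage cannot exhaust device memory; the oldest items drop first.
        self._pending = deque(maxlen=max_items)

    def publish(self, result: dict) -> None:
        """Record a new result and opportunistically try to deliver everything queued."""
        self._pending.append(result)
        self.flush()

    def flush(self) -> int:
        """Send queued results in FIFO order, stopping at the first failure. Returns count sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # connection still down; keep the item for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


if __name__ == "__main__":
    link = {"up": False}
    delivered = []

    def fake_send(msg: dict) -> bool:
        # Simulated network link: delivery only succeeds while link["up"] is True.
        if link["up"]:
            delivered.append(msg)
            return True
        return False

    buf = StoreAndForwardBuffer(fake_send)
    buf.publish({"reading": 1})  # link down: queued
    buf.publish({"reading": 2})  # link down: queued
    link["up"] = True
    buf.flush()                  # link restored: both delivered in order
    print(delivered)
```

Keeping the queue bounded is a design choice: an unbounded buffer would preserve every result but could exhaust a small device's memory during a long outage.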

"Microsoft will open source aspects of Azure IoT Edge to make them more modifiable."

Azure IoT Edge

At the company's annual Build developer conference, held this year May 7-9 in Seattle, Washington, Microsoft demonstrated what its edge and IoT offerings could bring to the creator community. One of its main focus points was Azure IoT Edge; Azure has long been synonymous with Microsoft's cloud solutions.

Although Azure IoT Edge had already been revealed, Microsoft went further on Build's first day, announcing that it was open-sourcing the Azure IoT Edge runtime, the collection of software that must be installed on a device before it can join the Azure IoT Edge network. According to ZDNet, the move is intended to attract more developers to the platform by making the software easier to modify and debug.

Cognitive computing meets edge

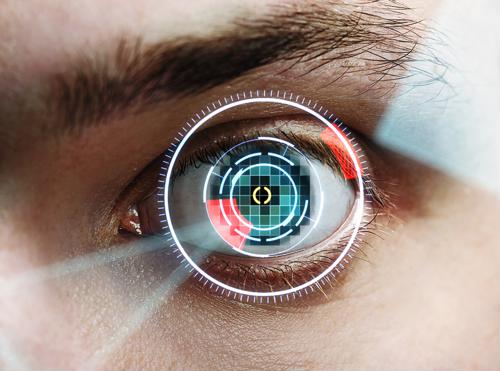

In addition to opening up development on its Azure IoT Edge solution, Microsoft also revealed that it was, in simple terms, going to make its network smarter. The company will bring its Custom Vision cognitive service to Azure IoT Edge. With this upgrade, devices like drones and autonomous vehicles can still execute vision-dependent tasks, even when disconnected from the larger online network.

This move is part of a larger effort, one which will see more of Microsoft's cognitive software heading to Azure IoT Edge. The goal is simple: Make machines more self-sufficient when it comes to performing complex tasks. It is easy to see this program influencing the manufacturing space, which is increasingly dependent on advanced robotics and autonomous industrial machinery.

Microsoft also announced that it has partnered with Qualcomm to create a vision AI developer kit that will run Azure IoT Edge. According to Qualcomm, the kit will enable the creation of advanced IoT solutions for smart home devices and security cameras, and home assistants can benefit from the technology as well. Developers using the kit can bring advanced AI capabilities to these types of hardware, which will be managed centrally through Azure.

Like the company's efforts with its in-house Custom Vision cognitive service, the goal here is to enable these on-premises devices to perform better and understand more data. Processing complex inputs such as vision on these small pieces of hardware is extremely difficult without AI and machine learning technology.

Project Kinect for Azure

The Kinect that Microsoft released for its Xbox home consoles was ultimately a commercial failure and has since been discontinued. However, the company is not abandoning its Kinect sensor technology. Announced on LinkedIn by Microsoft Technical Fellow Alex Kipman, Project Kinect for Azure aims to bring AI cognitive perception to a host of edge devices.

Kinect sensors bring with them a wealth of impressive features, including a 1024x1024 (roughly one-megapixel) resolution, high modulation contrast and frequency (which will allow the device to save power), a dynamic range that can capture objects at various distances and a multiphase depth calculation ability that will allow the device to "see" accurately, even amid external interference.

The Kinect was designed to see and understand people, along with their precise movements. Pairing this kind of sensor with Azure IoT Edge devices will extend cognitive computing to virtually any sensor-equipped device, pushing the understanding gained from edge networks to exciting new heights.

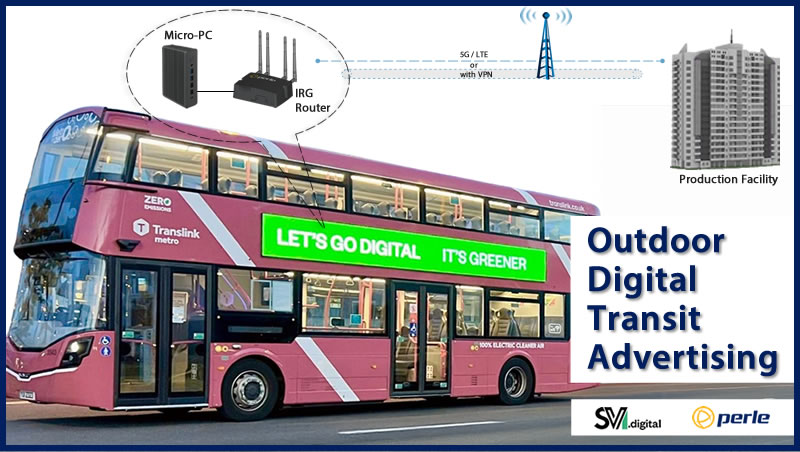

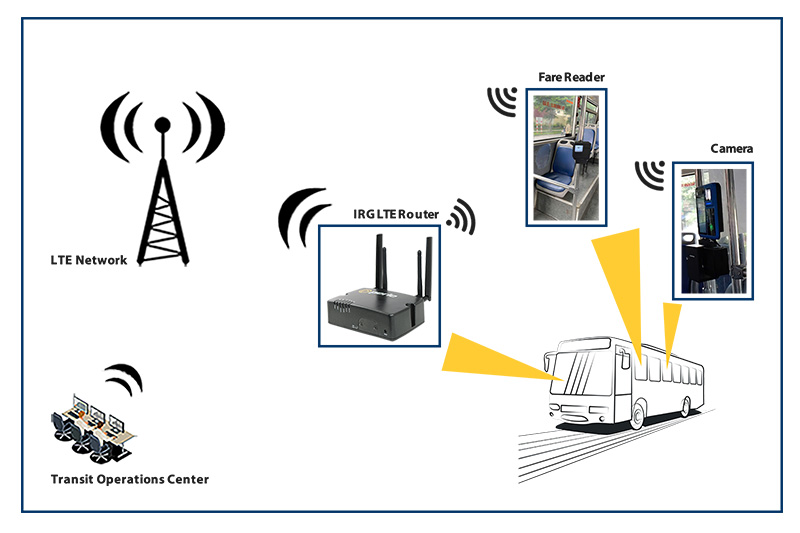

Of course, while edge computing takes the emphasis away from a centralized hub, robust network infrastructure is still vital to uninterrupted productivity. Perle has the personnel and hardware to improve your network connections, allowing you to more effectively use technology like Microsoft's Azure IoT Edge platform. Contact Perle today to learn how we can help optimize your organization for innovative edge solutions.